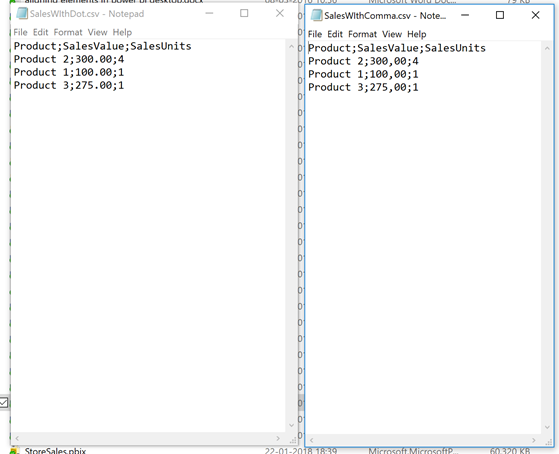

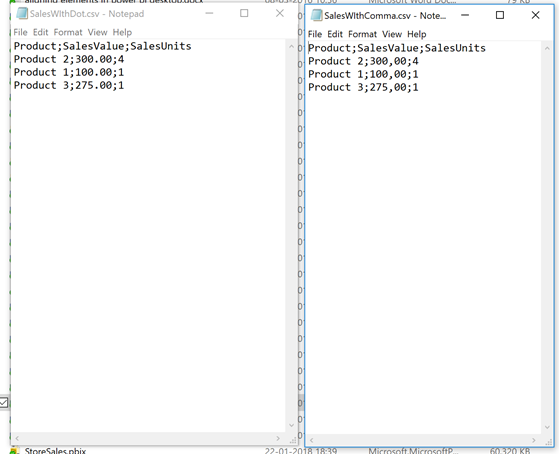

Recently I have been working on a project where the solution should import a csv file exported from a SQL server. For some reason sometimes the data comes with a , (comma) as the decimal separator and other times with . (dot) as the decimal separator.

This meant that when importing the different files, I had to find a way to dynamically change the culture code setting when importing the file.

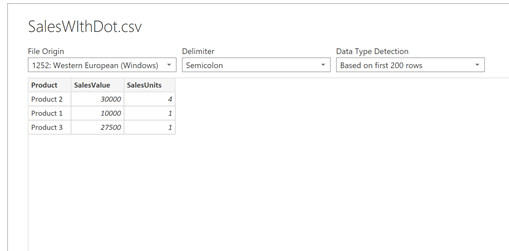

Let’s try and open the SalesWithDot.csv file in Power BI Desktop

As my Power BI Desktop uses Danish settings and danes uses , as a decimal separator Power BI desktop will think the values is 30.000 instead of 300 etc. – and we have to tell Power Query that the source is from another Culture – so I click Edit

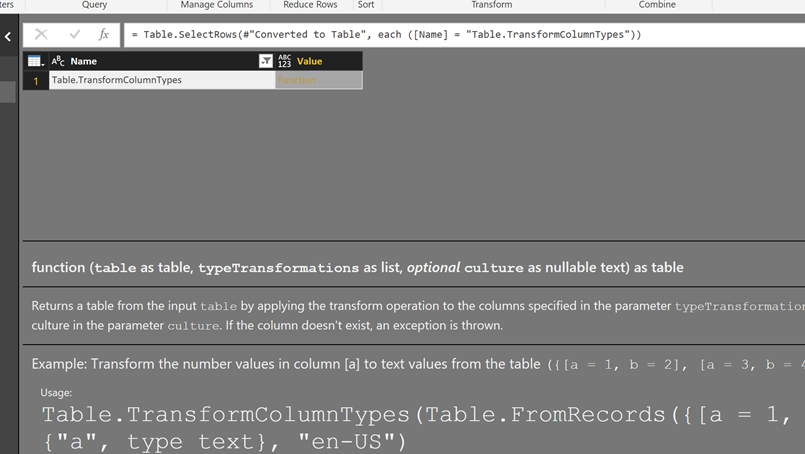

As we can see from the documentation Table.TransformColumnTypes – the function has an optional parameter called Culture –

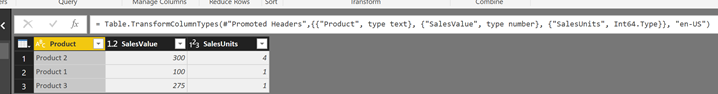

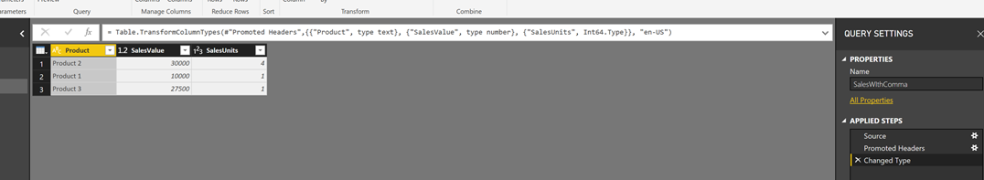

And by adding “en-US” to our formula bar we can have the right value in the SalesValue column

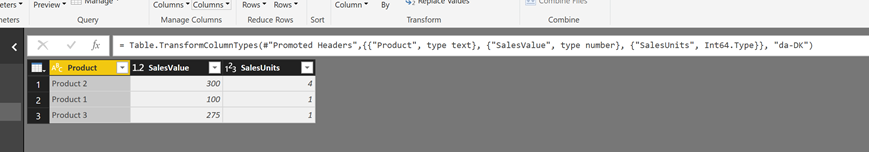

But when we use this when importing a file where the sales value is formatted with a , as the decimal separator and we use the same culture value (“en-US”) then we have the problem.

And by changing the culture to da-DK it shows the right values

So how can we make Power Query dynamically determine which local to use ?

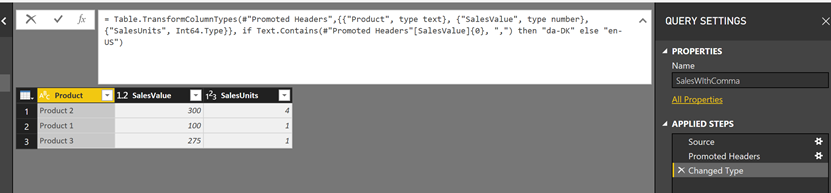

In this case I know that the column SalesValue will either contain a comma or a dot – and by checking the first value of the file imported I can select which culture to use – this can be done like this

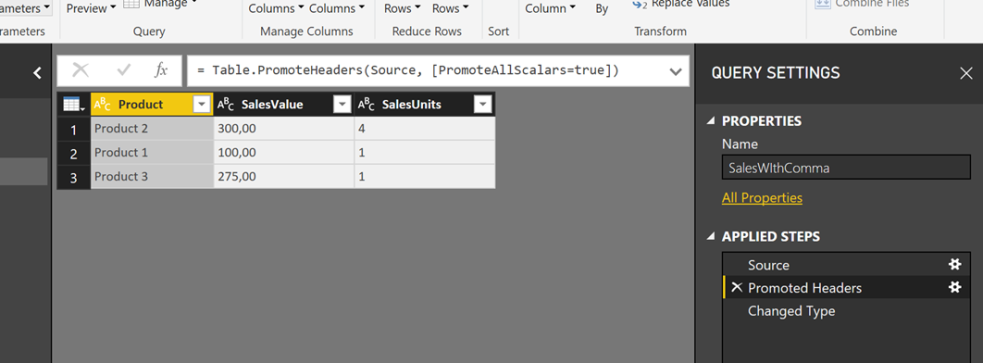

The step before we “Changed Type” is called “Promoted Headers”

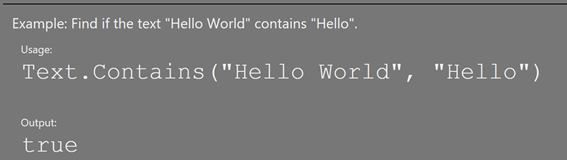

To check whether the Sales Value contains a comma – we can use the Text.Contains function

And we can refer to the first value in the SalesValue column like this

#”Promoted Headers”[SalesValue]{0}

Then

Text.Contains(#”Promoted Headers”[SalesValue]{0}, “,”)

Will give us true if the cell has a comma in the cell before its changed to a decimal value.

When we know this we can change the

= Table.TransformColumnTypes(#”Promoted Headers”,{{“Product”, type text}, {“SalesValue”, type number}, {“SalesUnits”, Int64.Type}}, “da-DK”)

To

= Table.TransformColumnTypes(#”Promoted Headers”,{{“Product”, type text}, {“SalesValue”, type number}, {“SalesUnits”, Int64.Type}}, if Text.Contains(#”Promoted Headers”[SalesValue]{0}, “,”) then “da-DK” else “en-US”)

The if statement will then use the da-DK culture if the SalesValue contains a comma.

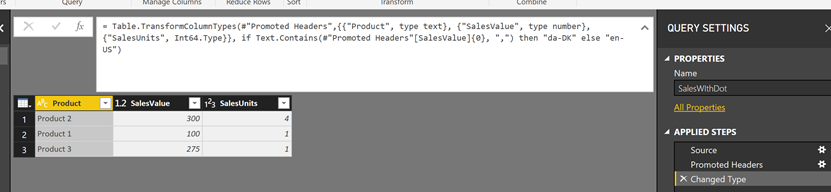

And in the example file with the dot

You can download an example file here.

Hope you find this useful – Power On!

Pingback: Power BI Report Background, Power Query, updates and more... (July 9, 2018) | Guy in a Cube

Erik, many thanks!

Erik, excelent tip !!! many thanks from southy america…

🙂 thx

Erik, great article! Decimals was driving me crazy until I read this. Thanks a million.

😀

Thank you!!!!! This is the perfect solution

Glad to hear it can help you 😀

Hi Erik, I spent hours to find a solutions for these weird decimals in csv files, with the most complicate operations I could image and realize also using several suggestions in Internet… till I am arrived to your “very simple” and effective solution… really many thanks, Giulio (Italy)

Hi Giulio – So glad to hear that /Erik 🙂

Thank you Erik,

Using Excel Power Query, select the Column, menu Transform, Data type, Decimal value, Enter, deliveres:

= Table.TransformColumnTypes(#”Previous Colum”,{{“AmountValue”, type number}, {“AmountTextWithDot”, type number}})

Adding – , “en-US” – before the last “)” will result in:

= Table.TransformColumnTypes(#”Volgorde Kolommen”,{{“ToegekendBedrag”, type number}, {“BedragDepotstorting”, type number}}, “en-US”)

…and done!

Thank you a lot.

Have a good day.

Hi

You are right – but this will not solve the challenge if one file has . and another has , as the decimal separator.

/Erik

Hi Erik,

Is there a way to run this formula for each cell in the price column? My db has both comma and dot decimals on the same file.

Thanks!

Hi

I am sure there is 🙂 – could you provide me some sample data and I will give it a try

Best

Erik

It works flawlessly. Thank you, Erik, that’s a great service!

This is the best post I have ever found on the internet : jij wint het internet.

The localization of excel drives me totally insane.

Good to hear 🙂