Inspired by Darrens Gosbell’s excellent blog post – Automatically generating measure descriptions for Power BI and Analysis Services with ChatGPT and Tabular Editor – Random Procrastination (gosbell.com) – and my session at SQLBits 2023 where I showed how to call the chatGPT from within Power Query and how you can use the New chat (openai.com) – to describe your M code.

I thought it would be nice if I could add this as a description to my tables in Power BI model.

How hard can that be 🙂

Well, it turns out that we are not allowed to modify the table object via the Tabular #ditor and this includes adding a description to the table

So, if I created a script that added a description – it worked fine but returning to the desktop I was asked to refresh the queries and the descriptions where removed by Power BI desktop –

Well, what is supported as write operations to a model?

Calculation groups is supported – hmmm… could I use this and then actually create a calculation group with a calculation item for each of the tables in my model – that would also mean that I could create a table in my report and expose the descriptions in a report page instead of the description should be hidden away on the tooltip of a table in the field list – This could actually be quite awesome!

C# Script here we go

I know very little about how to write C# code so Darrens example and the documentation of Tabular editor was a big help and please bear in mind that I am a C# novice when reading my code

The steps needed to this is as follows (I have used Tabular Editor 3 but it should be possible to us the free version of Tabular Editor as well

Step 1 – Open your Power BI desktop file and find the external tool ribbon and click the Tabular Editor

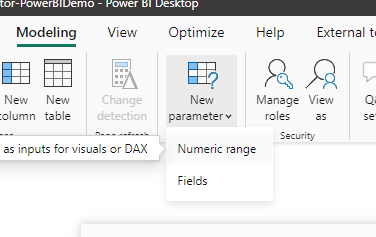

Step 2 – Add a Calculation Group called “Documentation”

Step 3 – Choose to create a New C# Script

Step 3 – Use the following script and run it

#r "System.Net.Http"

using System.Net.Http;

using System.Text;

using Newtonsoft.Json.Linq;

// You need to signin to https://platform.openai.com/ and create an API key for your profile then paste that key

// into the apiKey constant below

const string apiKey = "<YOUR_API_KEY>";

const string uri = "https://api.openai.com/v1/completions";

const string question = "Please describe this power query code for me:\n\n";

using (var client = new HttpClient()) {

client.DefaultRequestHeaders.Clear();

client.DefaultRequestHeaders.Add("Authorization", "Bearer " + apiKey);

foreach (var t in Model.Tables)

{

foreach ( var p in t.Partitions)

{

// Only uncomment the following when running from the command line or the script will

// show a popup after each measure

//

//var body = new requestBody() { prompt = question + m.Expression };

string _type = Convert.ToString(p.SourceType);

string _exp = Convert.ToString(p.Expression);

if ( _type == "M" )

{var body =

"{ \"prompt\": " + JsonConvert.SerializeObject( question + p.Expression ) +

",\"model\": \"text-davinci-003\" " +

",\"temperature\": 1 " +

",\"max_tokens\": 256 " +

",\"stop\": \".\" }";

var res = client.PostAsync(uri, new StringContent(body, Encoding.UTF8,"application/json"));

//res.Result.EnsureSuccessStatusCode();

var result = res.Result.Content.ReadAsStringAsync().Result;

var obj = JObject.Parse(result);

var desc = obj["choices"][0]["text"].ToString().Trim();

//Reference to your calculation group that should hold the calculation Items

var x =(Model.Tables["Documentation"] as CalculationGroupTable).CalculationItems[t.Name];

//deletes the old version

x.Delete();

var calculationItem1 = (Model.Tables["Documentation"] as CalculationGroupTable).AddCalculationItem();

//removes any quotes in the chatGPT description

var s = desc.Replace("\"", "");

calculationItem1.Expression = "\"" + s + "\"";

calculationItem1.Name = t.Name;

//Info("Processing " + t.Name) ;

}

}

}

}

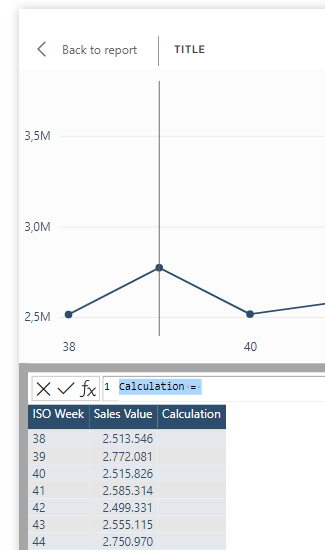

This will create a calculation item for each of your Power Query table and add a Expression that contains the chatGPT description of your M code

Example of one of the tables

Step 4 – Save the changes back to your model and you will be prompted to refresh your calculation group

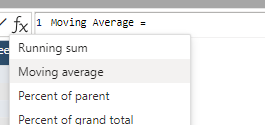

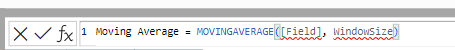

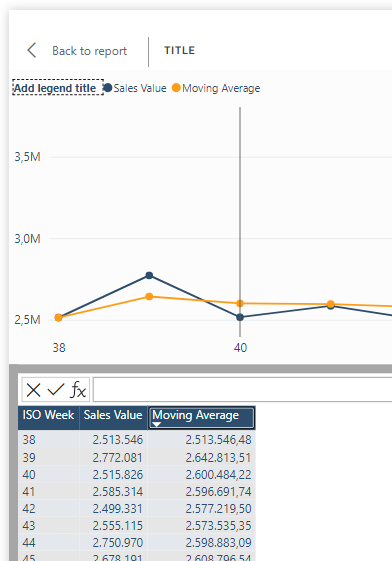

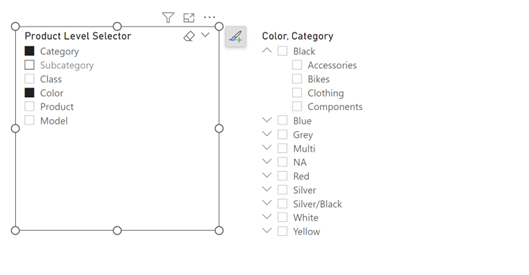

Step 5 – Add a table where you take the Calculation group name and a measure that I call Query description – the value of this measure will in the table be changed to the expression of the calculation item.

We could use the same method to document our DAX measures and put that into a calculation group as well – thereby documenting our full model and exposing the information directly in the report and not just in the tooltips of the fields.

I will try to find time to do a blog post on this as well.

Let me know in the comments if you find this useful – would very much like to hear from you.